Nightshade is still in development, but the team intends to integrate it with its existing artist protection tool, Glaze.

Researchers at the University of Chicago have created a tool that allows artists to “poison” their digital art in order to prevent developers from training AI systems on their work.


The technology, dubbed “Nightshade” after the plant family notorious for its deadly berries, alters images so that, when they are included in the datasets used to train AI, they contaminate those datasets with false information.

According to MIT’s Technology Review, Nightshade alters the pixels of a digital image so that an AI system misinterprets it. As an example, Technology Review describes convincing an AI that an image of a cat is actually an image of a dog, and vice versa.

In theory, this would degrade the AI’s ability to produce accurate, sensible outputs. In the example above, a user who asked the poisoned AI for an image of a “cat” might instead receive a dog labeled as a cat, or an amalgamation of all the “cats” in its training set, including those that are actually photos of dogs altered by the Nightshade tool.

According to Vitaly Shmatikov, a Cornell University professor who reviewed the paper, researchers “don’t yet know of robust defenses against these attacks.” The implication is that even robust models like OpenAI’s ChatGPT may be vulnerable.


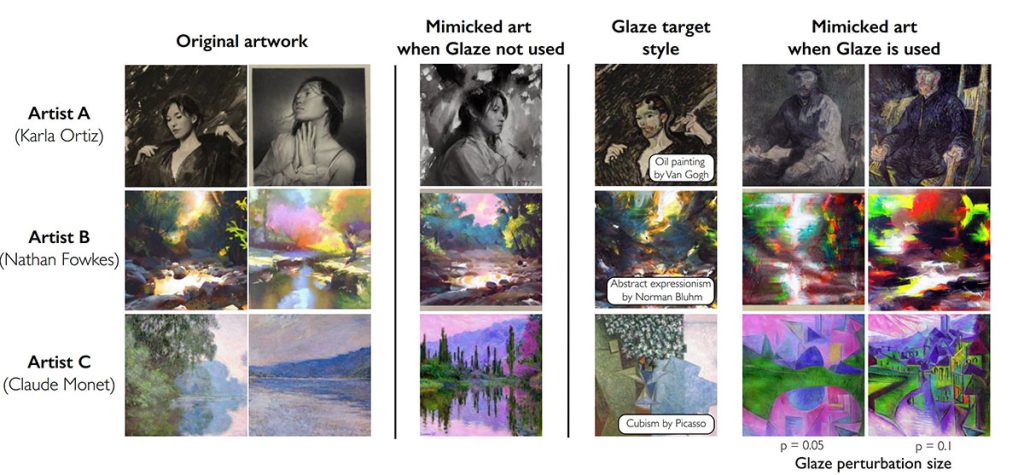

Professor Ben Zhao of the University of Chicago leads the Nightshade research team. The new technology is essentially an extension of Glaze, the team’s existing artist protection program, which gives artists a way to conceal, or “glaze,” the style of their artwork.

For example, a charcoal portrait can be glazed so that it appears to an AI system to be modern art.

According to Technology Review, Nightshade will eventually be integrated into Glaze, which is currently available free of charge to use on the web or to download from the University of Chicago’s website.


© 2024 Crypto Caster provides information. CryptoCaster.world does not provide investment advice. Do your research before taking a market position on the purchase of cryptocurrency and other asset classes. Past performance of any asset is not indicative of future results. All rights reserved.
